During my time at Serverless, Inc., I've talked with a lot of users about their serverless applications. One of the persistent questions that people ask is around which database to use.

Many people reach for DynamoDB as their database of choice, with good reason. It's the most serverless-friendly database, for a number of reasons:

- Accessible via HTTP;

- Fine-grained access controls with IAM;

- Usage-based pricing model;

- Stream-based activity log for updating secondary data stores

These features make DynamoDB a great fit for use with Lambda or even non-Lambda-based serverless solutions like Step Functions or AppSync.

That said, I see a few different patterns for modeling data in DynamoDB with serverless applications. In this post, I want to cover the four patterns I see most often:

- The Simple Use Case

- Faux-SQL

- "All-in NoSQL"

- The True Microservice

Let's review them one-by-one, covering the pros and cons of each.

The Simple Use Case

One of the great things about serverless is how easy it is to build and maintain simple services. In a few hours, you can have a Slack bot or a GitHub webhook handler.

For these simple applications, DynamoDB is a perfect fit. Your data access patterns are pretty limited, so you won't need to go deep on learning DynamoDB. You can handle all of your needs with a single table, often without the use of secondary indexes.
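As a rough illustration of how little modeling this takes, here is a minimal sketch of the request parameters for a single table with a simple primary key. The table name `slack-bot-state` and the attributes `teamId` and `defaultChannel` are hypothetical names I made up for the example; the parameter shapes follow DynamoDB's low-level PutItem and GetItem operations.

```python
# Hypothetical names: the table "slack-bot-state" and its attributes are
# illustrative only, not taken from a real application.
TABLE_NAME = "slack-bot-state"


def put_item_params(team_id: str, channel: str) -> dict:
    """Build PutItem parameters for an item keyed only by teamId."""
    return {
        "TableName": TABLE_NAME,
        "Item": {
            "teamId": {"S": team_id},          # simple primary key (hash key only)
            "defaultChannel": {"S": channel},  # any other attribute you need
        },
    }


def get_item_params(team_id: str) -> dict:
    """Build GetItem parameters for the same simple key."""
    return {
        "TableName": TABLE_NAME,
        "Key": {"teamId": {"S": team_id}},
    }
```

With boto3's low-level client, these dictionaries could be passed along as `client.put_item(**params)` and `client.get_item(**params)`. No secondary indexes are involved, because every read is a lookup by key.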

I find these simple use cases to be one of the "gateway drugs" of serverless usage. These apps can be free to run with the AWS Free Tier. They're often internal tools or fun toys, so you don't need to go deep on Lambda internals for optimizing performance or avoiding cold starts.

While these simple use cases are a great way to learn and provide quick value, it's the production workloads that are most interesting. Let's move on to the next most common pattern I see.

Faux-SQL

In production, you have more complex applications than the simple use case. You have multiple, interrelated data models. For serverless users, it can be a hard choice between:

Traditional SQL database: A data model they know and understand, but a scaling and interface model that doesn't fit within the serverless paradigm.

DynamoDB: An HTTP-based service that scales well but has an unfamiliar data model.

Some people decide to choose parts of both -- use multiple DynamoDB tables and normalize their data. For example, if you were building a blog, you might have two DynamoDB tables -- posts and comments. The comments table would use a postId as the hash key of its primary key to tie the comments to a specific post.
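To make the blog example concrete, here is a sketch of what the two table definitions might look like as CreateTable parameters. The `commentId` range key and the on-demand billing mode are my own assumptions for illustration; the only detail from the example above is that the comments table uses `postId` as its hash key.

```python
# One table per entity, as in the faux-SQL pattern.
POSTS_TABLE = {
    "TableName": "posts",
    "AttributeDefinitions": [
        {"AttributeName": "postId", "AttributeType": "S"},
    ],
    "KeySchema": [
        {"AttributeName": "postId", "KeyType": "HASH"},
    ],
    "BillingMode": "PAY_PER_REQUEST",  # assumption: on-demand capacity
}

COMMENTS_TABLE = {
    "TableName": "comments",
    "AttributeDefinitions": [
        {"AttributeName": "postId", "AttributeType": "S"},
        {"AttributeName": "commentId", "AttributeType": "S"},
    ],
    "KeySchema": [
        # postId acts like a foreign key pointing at the posts table.
        {"AttributeName": "postId", "KeyType": "HASH"},
        # Assumption: commentId as the range key makes each comment unique.
        {"AttributeName": "commentId", "KeyType": "RANGE"},
    ],
    "BillingMode": "PAY_PER_REQUEST",
}
```

Note that nothing in DynamoDB knows these two tables are related. The relationship lives entirely in your application.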

This pattern is not recommended by the DynamoDB team. They have some great best practices on modeling relational data, but they advise strongly against normalizing your data in a faux-SQL approach.

That said, I see this pattern recommended a lot by the AWS AppSync team. AppSync is a hosted GraphQL service from AWS, and many of the tutorials use a separate DynamoDB table per entity.

Pros of the Faux-SQL approach

It's familiar to developers. They know how to normalize data. They don't have to learn a complex new data modeling system.

It's easier to export to an external service. Often you will replicate DynamoDB data into a secondary datastore, such as Elasticsearch for searching or Redshift for analytics. Since your data is already normalized, it can be streamed directly into those systems without much modification.

It's easier to add new query patterns in the future. It's not as flexible as a traditional SQL database in this regard as you're still limited by DynamoDB's query patterns, but a normalized structure allows for additional flexibility in the future.

Cons of the Faux-SQL approach

You are making multiple queries per request. With DynamoDB, you should aim to satisfy each access pattern with a single query.

Your application code just became a database. Rather than doing a JOIN in SQL, you are doing it in code. This means you are responsible for maintaining referential integrity and for optimizing your queries.

You know what's really good at maintaining referential integrity and optimizing JOINs? A relational database.

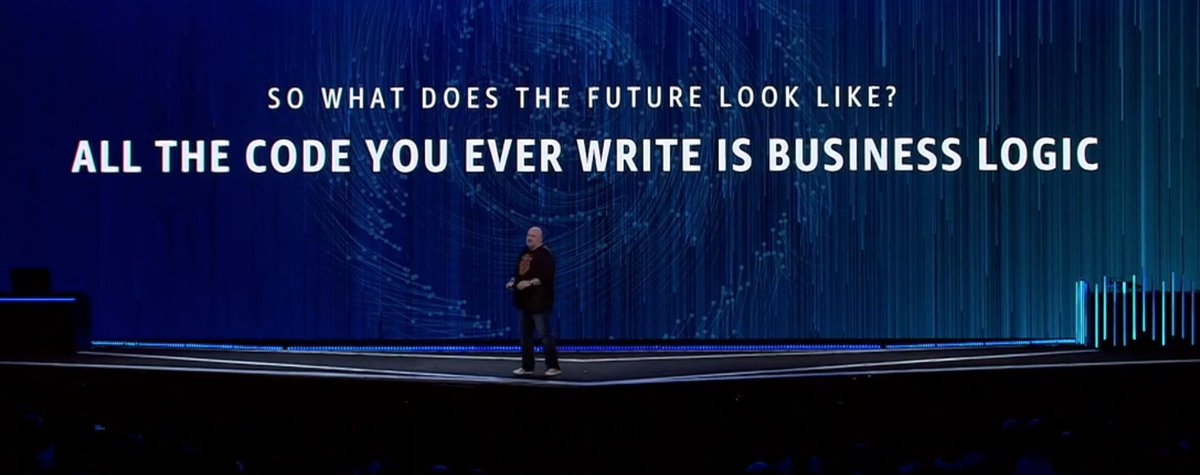

If the promise of serverless is that the only code you write is your business logic, why are you replicating half of an RDBMS in your application code?
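Here is a minimal sketch of what that application-side JOIN tends to look like. The two dictionaries are in-memory stand-ins for the posts and comments tables, and all of the data and function names are made up for illustration. In a real service, each helper would be a separate network call to DynamoDB.

```python
# In-memory stand-ins for the two DynamoDB tables; all data is made up.
POSTS = {
    "post-1": {"postId": "post-1", "title": "Hello, serverless"},
}
COMMENTS = {
    "post-1": [
        {"postId": "post-1", "commentId": "c-1", "text": "First!"},
        {"postId": "post-1", "commentId": "c-2", "text": "Nice post"},
    ],
}


def get_post(post_id):
    """Round trip 1: fetch the post by its key."""
    return POSTS.get(post_id)


def query_comments(post_id):
    """Round trip 2: fetch the comments that share the post's key."""
    return COMMENTS.get(post_id, [])


def get_post_with_comments(post_id):
    """The 'JOIN', done by hand in application code."""
    post = get_post(post_id)
    if post is None:
        # Nothing stops comments from pointing at a missing post, so the
        # application has to decide what an orphaned comment means.
        return None
    return {**post, "comments": query_comments(post_id)}
```

Even in this toy version, the code is doing two lookups and handling a referential-integrity edge case itself, which is the work a relational database would normally do for you.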

If you want a flexible, normalized data system, use a traditional RDBMS + SQL. If you want to use DynamoDB, lean in to the NoSQL mindset.

This brings us to our next pattern: going "all-in" on NoSQL.

"All-in NoSQL"

The third DynamoDB pattern I see is the "All-in NoSQL" model. In this pattern, people take the time to learn and understand NoSQL generally and DynamoDB specifically.

They don't fight NoSQL. They embrace it.

The best way to get up to speed on this is to watch the Advanced Design Patterns for DynamoDB talk from re:Invent 2018 by database rockstar Rick Houlihan. Based on the Twitter chatter I saw this year, he was the breakout favorite from re:Invent.

Feel completely schooled (in the best way) by Rick Houlihan's talk about DynamoDB, but also general NoSQL data modeling practices from #reinvent2018. Already online! https://t.co/OwhN4xzkSV Highly recommended if you're open to 🤯

— Josh Barratt (@jbarratt) November 29, 2018

One of the more important points Rick makes is that "NoSQL is not flexible. It's efficient but not flexible."

NoSQL databases are designed to scale infinitely with no performance degradation. They're able to be efficient because they are perfectly tailored for your queries.

Unlike a SQL database, you won't design your tables first and then see what questions you need to answer. You map out your query patterns up front, then design your DynamoDB table around them.

You will need to learn how DynamoDB works.

You will need to overload your secondary indexes.

It will be extra work upfront.

If your application needs to scale, it will be worth it.
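To give a feel for the modeling style, here is a minimal sketch of the blog example stored in a single table with generic key attributes. The `PK`/`SK` names, the `POST#` and `COMMENT#` prefixes, and the `entity` attribute are conventions I am assuming for illustration, not anything DynamoDB requires. The `query_partition` function only simulates a Query on the partition key in memory. This shows the basic building block, where different entity types share generic keys; overloading a secondary index applies the same idea to the index's keys.

```python
def post_item(post_id, title):
    """A post lives in its own partition under a fixed sort key."""
    return {"PK": f"POST#{post_id}", "SK": "#METADATA", "entity": "post", "title": title}


def comment_item(post_id, comment_id, text):
    """Comments share the post's partition key, so they sit next to it."""
    return {
        "PK": f"POST#{post_id}",
        "SK": f"COMMENT#{comment_id}",
        "entity": "comment",
        "text": text,
    }


ITEMS = [
    comment_item("post-1", "c-2", "Nice post"),
    post_item("post-1", "Hello, serverless"),
    comment_item("post-1", "c-1", "First!"),
    post_item("post-2", "Another post"),
]


def query_partition(items, pk):
    """Simulate a Query on the partition key: matching items, ordered by SK."""
    return sorted((i for i in items if i["PK"] == pk), key=lambda i: i["SK"])


# One "query" returns the post followed by its comments.
post_and_comments = query_partition(ITEMS, "POST#post-1")
```

The access pattern "get a post with its comments" is now a single query against one partition, which is exactly the kind of thing you have to plan for before you create the table.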

Pros of the "All-in" NoSQL approach

Your queries will be efficient. You should be able to satisfy any access pattern with a single query. Your database won't be your application bottleneck.

You are built for scale. Your application should scale infinitely without any performance degradation. You won't need to rearchitect because of data access concerns.

Cons of the "All-in" NoSQL approach

It's unfamiliar to your developers. Most developers have a good understanding of modeling for SQL databases. Modeling for NoSQL is a different world, and it can take some time to get up to speed.

It's more work upfront. You have to do the hard work up front to really think about your data access patterns and how you will satisfy them.

It's less flexible. If you want to add new access patterns in the future, it may be impossible without a significant migration. You should account for this possibility.

It's harder to replicate into a secondary source. If you want to stream your DynamoDB data into something like Elasticsearch for search or to Redshift for analytics, you will need to do heavy transformations to normalize it before sending it to the downstream systems.
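As a sketch of the kind of transformation that last point implies, the function below turns one single-table item back into a flat, normalized record. It assumes a hypothetical key layout where `PK` is `POST#<postId>` and `SK` is either `#METADATA` for the post itself or `COMMENT#<commentId>` for a comment. Those names and the destination labels are illustrative assumptions.

```python
def normalize(item):
    """Turn one single-table item into a (destination, flat record) pair."""
    # Strip the "POST#" prefix to recover the plain identifier.
    post_id = item["PK"].split("#", 1)[1]

    if item["SK"].startswith("COMMENT#"):
        comment_id = item["SK"].split("#", 1)[1]
        return "comments", {
            "postId": post_id,
            "commentId": comment_id,
            "text": item["text"],
        }

    # Anything else in this partition is treated as the post itself.
    return "posts", {"postId": post_id, "title": item["title"]}
```

A stream consumer would run something like this on every changed item before forwarding the record downstream, and the branching grows with each entity type you pack into the table.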

When done right, the "All-in NoSQL" approach can be wonderful. The key is taking the time to do it right.

Finally, let's take a look at a fourth pattern -- the True Microservice.

The True Microservice

The previous two patterns -- faux-SQL and "All-in NoSQL" -- are strategies for when you have a complex data model with multiple, interrelated entities. In this pattern, the True Microservice, we will see how to get all of the benefits of DynamoDB with none of the downsides.

Microservices are one of the bigger trends in software development in recent years. Many people are using serverless applications to build a microservice architecture.

When building microservices, you should aim for each microservice to align with a bounded context. Further, you should avoid synchronous communication with other services as much as possible. This is particularly true in Lambda where, as Paul Johnston says, "functions don't call other functions". The added latency and dependency issues will erode the benefits you want from microservices.

My favorite use case for Lambda + DynamoDB-based microservices is basically implementing the principles from Martin Kleppmann's talk, Turning the Database Inside Out. In it, Martin describes a new way to think about data in an event-centric world. Rather than using the same, single datastore for all of your reading + writing needs, you use purpose-built views on your data that are tailored to a particular use case.

Let's see how this might fit in a serverless architecture. Your microservice is likely getting its data asynchronously from an upstream source. This could be from an SQS queue, a Kinesis stream, or even the stream of a DynamoDB table from a different service. The upstream source will trigger a writer function that saves the data to DynamoDB.

All web requests to your service are read-only requests. This service is handling one or maybe two data entities, so your datastore is perfectly tailored to serve these read requests.
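Here is a minimal sketch of that writer/reader split, assuming the upstream source is an SQS queue. The `Records` and `body` fields follow the shape of the event Lambda receives from SQS; the `orderId` attribute, the handler names, and the in-memory `READ_MODEL` dictionary (standing in for the service's DynamoDB table) are hypothetical.

```python
import json

# Stand-in for the service's DynamoDB table. A real writer would save each
# message with a PutItem call instead of writing to a dictionary.
READ_MODEL = {}


def writer_handler(event, context=None):
    """Triggered by the upstream source; the only code path that writes."""
    for record in event["Records"]:
        message = json.loads(record["body"])  # SQS puts the payload in "body"
        READ_MODEL[message["orderId"]] = message
    return {"written": len(event["Records"])}


def read_handler(order_id):
    """Serves web requests; it never writes, it only reads the stored view."""
    return READ_MODEL.get(order_id)
```

Because the writer shapes the data as it arrives, the read path can stay a plain key lookup.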

Pros of the True Microservice

Simple, straightforward data modeling. Because you are working with a limited domain, you don't need to get into advanced data modeling work with DynamoDB.

Fast, scalable queries. You get all the benefits of DynamoDB's low response times with infinite scaling.

Easy to stream into secondary sources. The data model is straightforward, so you don't need to do as much work to normalize when sending to Elasticsearch or Redshift.

Cons of the True Microservice

Not always possible. It may be difficult to whittle each portion of your application into a small, contained microservice. As Rick Houlihan says in his talk, "All data is relational. If it wasn't, we wouldn't care about it." For the core of your application, you may have multiple related entities that you cannot break into independent services without relying on expensive service-to-service calls.

Eventual consistency issues. If you are populating your data asynchronously from upstream sources, you can run into consistency issues. This can be confusing to users of your application. Not all True Microservices use the asynchronous writer pattern, but many do.

The True Microservice is the purest serverless application, and I highly recommend using it when you can. The logic is straightforward due to the limited domain. Scaling is easy and automatic, and it's easy to see how much the service is costing you on your bill.

Conclusion

DynamoDB is a great database and a perfect fit for many serverless applications. In this post, we covered the four main patterns I see with DynamoDB and their pros and cons.

I'd love to hear about how you are using DynamoDB or about which other questions you have on DynamoDB. Hit me up on Twitter or via email if you want to chat!